The Epochalypse: It’s Y2K, But 38 Years Later

Picture this: it’s January 19th, 2038, at exactly 03:14:07 UTC. Somewhere in a data center, a Unix system quietly ticks over its internal clock counter one more time. But instead of moving forward to 03:14:08, something strange happens. The system suddenly thinks it’s December 13th, 1901. Chaos ensues.

Welcome to the Year 2038 problem. It goes by a number of other fun names—the Unix Millennium Bug, the Epochalypse, or Y2K38. It’s another example of a fundamental computing limit that requires major human intervention to fix.

By and large, the Y2K problem was dealt with ahead of time for critical systems. An amusing example of a Y2K failure was this sign at the École Centrale de Nantes, pictured on January 3, 2000. Credit: Bug de l’an 2000, CC BY-SA 3.0

The Y2K problem was simple enough. Many computing systems stored years as two-digit figures, often for the sake of minimizing space needed on highly-constrained systems, back when RAM and storage, or space on punch cards, were strictly limited. This generally limited a system to understanding dates from 1900 to 1999; a year 2000 stored as the two digits “00” would effectively be read back as 1900. This promised to cause chaos in all sorts of ways, particularly in things like financial systems processing transactions in the year 2000 and onwards.
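To make the failure concrete, here is a minimal Python sketch of what two-digit year arithmetic does across the century boundary. The function names and the pivot value of 70 are illustrative assumptions, not taken from any particular legacy system; the "windowing" approach shown is just one of the remediation styles that were used.

```python
def years_between(start_yy: int, end_yy: int) -> int:
    """Naive interval arithmetic on two-digit years, as a legacy system might do it."""
    return end_yy - start_yy


def expand_year(yy: int, pivot: int = 70) -> int:
    """Hypothetical windowing fix: read 00-69 as 20xx and 70-99 as 19xx."""
    return (2000 if yy < pivot else 1900) + yy


# An account opened in 1999 (stored as 99) and inspected in 2000 (stored as 00).
naive_age = years_between(99, 0)                  # -99: the interval runs backwards
fixed_age = expand_year(0) - expand_year(99)      # 1: the intended answer

print(naive_age, fixed_age)
```

Note that windowing only moves the ambiguity to a different pair of years, which is why storing the full four-digit year was the more durable fix.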

The problem was first identified in 1958 by Bob Bemer, who was working on longer time scales with genealogical software. Awareness slowly grew through the 1980s and 1990s as the critical date approached and things like long-term investment bonds started to butt up against the year 2000. Great effort was expended to overhaul and update important computer systems to enable them to store dates in a fashion that would not loop around back to 1900 after 1999.

Unlike Y2K, which was largely about how dates were stored and displayed, the 2038 problem is rooted in the fundamental way Unix-like systems keep track of time. Since the early 1970s, Unix systems have measured time as the number of seconds elapsed since January 1st, 1970, at 00:00:00 UTC. This moment in time is known as the “Unix epoch.” Recording time in this manner seemed like a perfectly reasonable approach at the time. It gave systems a simple, standardized way to handle timestamps and scheduled tasks.

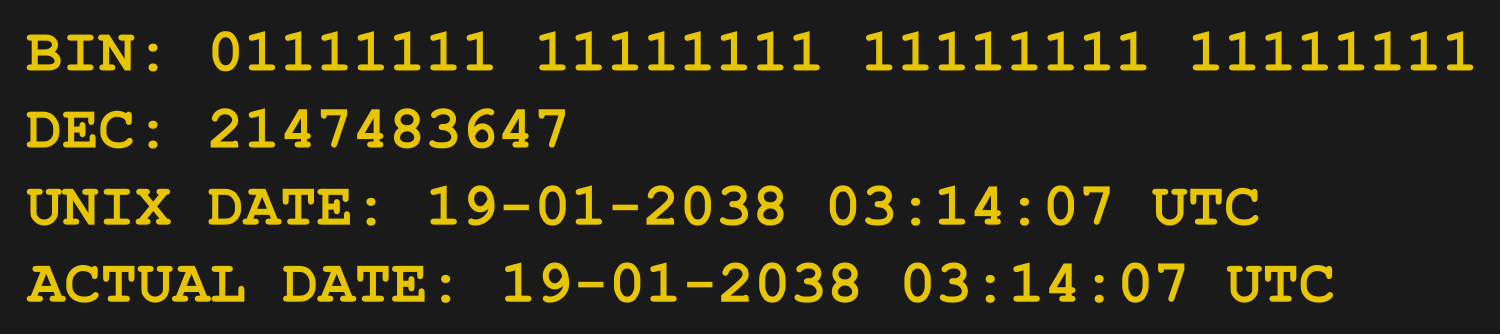

The trouble is that this timestamp was traditionally stored as a signed 32-bit integer. Thanks to the magic of binary, a signed 32-bit integer can represent values from -2,147,483,648 to 2,147,483,647. When you’re counting individual seconds, that gives you about 68 years on either side of the epoch date. Do the math, and you’ll find that 2,147,483,647 seconds after January 1st, 1970 lands you at 03:14:07 UTC on January 19th, 2038. That’s the last moment that a signed 32-bit counter starting at the Unix epoch can represent.
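If you'd rather not do the math by hand, the arithmetic can be checked with a few lines of Python. This is only a sketch using the standard library's own date handling; the constant names are chosen here for illustration.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)   # the Unix epoch
INT32_MAX = 2**31 - 1                               # 2,147,483,647

# The last moment a signed 32-bit seconds counter can represent.
last_moment = EPOCH + timedelta(seconds=INT32_MAX)

# The same span expressed in (Julian) years of 365.25 days.
span_years = INT32_MAX / (365.25 * 24 * 3600)

print(last_moment.isoformat())   # 2038-01-19T03:14:07+00:00
print(round(span_years, 1))      # 68.0
```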

The Unix time integer immediately prior to overflow.

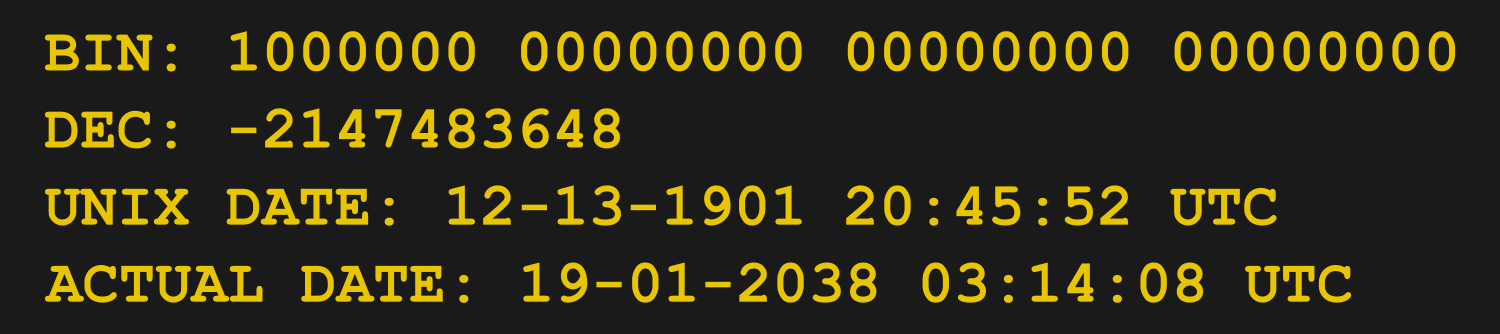

What happens next isn’t pretty. When that counter tries to increment one more time, it overflows. In two’s complement arithmetic, the most significant bit is the sign bit, so adding one to the largest positive value sets that bit and produces the most negative value instead. Thus, the timestamp rolls over from 2,147,483,647 to -2,147,483,648. That translates to 20:45:52 UTC on December 13th, 1901. In January 2038, this will be roughly 136 years in the past.
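The rollover can be simulated in Python, whose integers never overflow on their own, by reducing the result to the signed 32-bit range by hand. The helper `wrap_int32` is a name made up for this sketch. It mimics what typical two's complement hardware does; in C itself, signed integer overflow is formally undefined behavior, so real systems may not fail this tidily.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def wrap_int32(value: int) -> int:
    """Reduce an integer to the signed 32-bit range, two's complement style."""
    return (value + 2**31) % 2**32 - 2**31


before = 2**31 - 1                  # 03:14:07 UTC on January 19th, 2038
after = wrap_int32(before + 1)      # one tick later, wrapped around

wrapped_date = EPOCH + timedelta(seconds=after)

print(after)                        # -2147483648
print(wrapped_date.isoformat())     # 1901-12-13T20:45:52+00:00
```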

Unix time after the 32-bit signed integer has overflowed.

For an unpatched system using a signed 32-bit integer to track Unix time, the immediate consequences could be severe. Software could malfunction when trying to calculate time differences that suddenly span more than a century in the wrong direction, and logs and database entries could quickly become corrupted as operations are performed on invalid dates. Databases might reject “historical” entries, file systems could become confused about which files are newer than others, and scheduled tasks might cease to run or run at inappropriate times.

This isn’t just some abstract future problem. If you grew up in the 20th century, it might sound far off—but 2038 is just 13 years away. In fact, the 2038 bug is already causing issues today. Any software that tries to work with dates beyond 2038—such as financial systems calculating 30-year mortgages—could fall over this bug right now.
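The mortgage case is easy to reproduce. The sketch below uses Python's `struct` module to stand in for any storage format with a signed 32-bit timestamp field; the start date and the helper name `fits_in_int32_time` are assumptions picked for illustration.

```python
import struct
from datetime import datetime, timezone


def fits_in_int32_time(moment: datetime) -> bool:
    """Return True if the moment's Unix timestamp fits a signed 32-bit field."""
    try:
        struct.pack("<i", int(moment.timestamp()))
        return True
    except struct.error:
        # struct refuses to pack values outside the signed 32-bit range.
        return False


start = datetime(2025, 7, 22, tzinfo=timezone.utc)      # hypothetical loan start
maturity = start.replace(year=start.year + 30)          # 30-year term ends in 2055

print(fits_in_int32_time(start))      # True
print(fits_in_int32_time(maturity))   # False
```

Here the failure is at least loud. Code that silently truncates or wraps the value instead would store a maturity date somewhere in the early 20th century.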

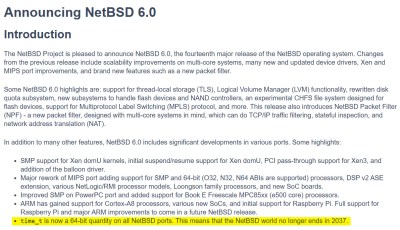

In 2012, NetBSD 6.0 introduced 64-bit Unix time across both 32-bit and 64-bit architectures. There is also a binary compatibility layer for running older applications, though they will still suffer the year 2038 problem internally. Credit: NetBSD changelog

The obvious fix is to move from 32-bit to 64-bit timestamps. A signed 64-bit integer can represent timestamps roughly 292 billion years into the future, which is about 21 times the current age of the universe. That won’t quite outlast the heat death of the universe, which is expected to lie unimaginably further off, but it should comfortably cover anything we’re likely to build.
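The 292-billion-year figure falls out of the same arithmetic as the 68-year one, just with 63 value bits instead of 31. A quick sketch, assuming a year of 365.25 days:

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600

INT32_MAX = 2**31 - 1
INT64_MAX = 2**63 - 1

years_32 = INT32_MAX / SECONDS_PER_YEAR
years_64 = INT64_MAX / SECONDS_PER_YEAR

print(f"32-bit: about {years_32:.0f} years")                  # about 68 years
print(f"64-bit: about {years_64 / 1e9:.0f} billion years")    # about 292 billion years
```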

Indeed, most modern Unix-based operating systems have already made this transition. Linux moved to 64-bit time_t values on 64-bit platforms years ago, and since version 5.6 in 2020, it supports 64-bit timestamps even on 32-bit hardware. OpenBSD has used 64-bit timestamps since May 2014, while NetBSD made the switch even earlier in 2012.
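One rough way to see which side of the transition a given machine is on is to ask its C library to convert a timestamp just past the limit. The sketch below does that through Python's `time.gmtime`, which is documented to raise `OverflowError` when the value does not fit the platform's `time_t`. The function name is made up for this example, and a passing result only says something about the Python runtime and C library in use, not about every application on the system.

```python
import time


def platform_handles_post_2038() -> bool:
    """Check whether the platform can convert a timestamp one second past the 32-bit limit."""
    try:
        time.gmtime(2**31)     # 03:14:08 UTC on January 19th, 2038
        return True
    except (OverflowError, OSError):
        return False


print(platform_handles_post_2038())
```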

Most other modern Unix filesystems, C compilers, and database systems have switched over to 64-bit time by now. With that said, some have used hackier solutions that kick the can down the road rather than fixing the problem for all of foreseeable time. For example, the ext4 filesystem uses a complicated timestamping system involving nanoseconds that runs out in 2446. XFS does a little better, but it’s only good up to 2486. Meanwhile, Microsoft Windows uses its own 64-bit system tracking 100-nanosecond intervals since 1 January 1601. This will overflow as soon as the year 30,828.
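Those end dates can be reproduced from the field widths. The sketch below rests on assumptions about the on-disk formats that go beyond what is stated above: that ext4 extends its seconds field to 34 bits while keeping the 1901 lower bound, that XFS counts nanoseconds in an unsigned 64-bit field starting from that same 1901 lower bound, and that the Windows counter is treated as a signed 64-bit value. The Windows year is approximated with an average Gregorian year, since Python's `datetime` stops at the year 9999.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MIN = -2**31                    # December 13th, 1901

# ext4 (assumed): 34 bits of seconds, still starting at the 1901 lower bound.
ext4_max = EPOCH + timedelta(seconds=INT32_MIN + 2**34 - 1)

# XFS (assumed): unsigned 64-bit nanosecond counter starting at the 1901 lower bound.
xfs_max = EPOCH + timedelta(seconds=INT32_MIN + 2**64 // 10**9)

# Windows (assumed signed): 100-nanosecond ticks since January 1st, 1601.
GREGORIAN_YEAR_SECONDS = 31_556_952   # 365.2425 days
filetime_seconds = (2**63 - 1) // 10**7
windows_max_year = 1601 + filetime_seconds // GREGORIAN_YEAR_SECONDS

print(ext4_max.date())       # 2446-05-10
print(xfs_max.date())        # 2486-07-02
print(windows_max_year)      # 30828
```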

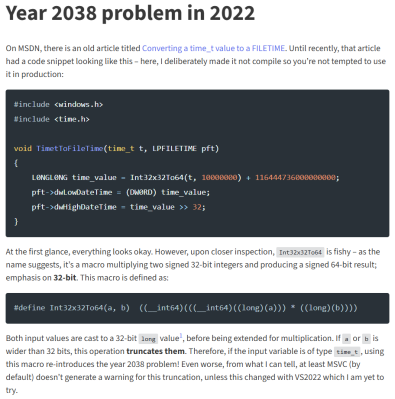

The challenge isn’t just in the operating systems, though. The problem affects software and embedded systems, too. Most things built today on modern architectures will probably be fine where the Year 2038 problem is concerned. However, things that were built more than a decade ago that were intended to run near-indefinitely could be a problem. Enterprise software, networking equipment, or industrial controllers could all trip over the Unix date limit come 2038 if they’re not updated beforehand. There are also obscure dependencies and bits of code out there that can cause even modern applications to suffer this problem if you’re not looking out for them.

In 2022, a coder called Silent identified a code snippet that was reintroducing the Year 2038 bug to new software. Credit: Silent’s blog via screenshot

The real engineering challenge lies in maintaining compatibility during the transition. File formats need updating and databases must be migrated without mangling dates in the process. For systems in the industrial, financial, and commercial fields where downtime is anathema, this can be very challenging work. In extreme cases, solving the problem might involve porting a whole system to a new operating system architecture, incurring huge development and maintenance costs to make the changeover.
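At its simplest, a migration of this kind means widening every stored timestamp without disturbing its value, including any negative pre-1970 dates. The sketch below assumes a hypothetical legacy record format consisting of nothing but little-endian signed 32-bit timestamps packed back to back; real formats will have headers, other fields, and alignment rules to preserve as well.

```python
import struct


def widen_timestamps(legacy: bytes) -> bytes:
    """Re-encode packed little-endian int32 timestamps as int64, preserving sign."""
    count, remainder = divmod(len(legacy), 4)
    if remainder:
        raise ValueError("legacy data is not a whole number of 32-bit fields")
    values = struct.unpack(f"<{count}i", legacy)
    return struct.pack(f"<{count}q", *values)


# Three sample timestamps: one second before the epoch, the epoch, and the 2038 limit.
legacy = struct.pack("<3i", -1, 0, 2**31 - 1)
widened = widen_timestamps(legacy)

print(len(legacy), len(widened))         # 12 24
print(struct.unpack("<3q", widened))     # (-1, 0, 2147483647)
```

Reading the old fields as signed matters here: treating them as unsigned during conversion would turn a date just before 1970 into one in 2106.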

The 2038 problem is really a case study in technical debt and the long-term consequences of design decisions. The Unix epoch seemed perfectly reasonable in 1970 when 2038 felt like science fiction. Few developing those systems thought a choice made back then would have lasting consequences over 60 years later. It’s a reminder that today’s pragmatic engineering choices might become tomorrow’s technical challenges.

The good news is that most consumer-facing systems will likely be fine. Your smartphone, laptop, and desktop computer almost certainly use 64-bit timestamps already. The real work is happening in the background: corporate system administrators updating server infrastructure, embedded systems engineers planning obsolescence cycles, and software developers auditing code for time-related assumptions. The rest of us just get to kick back and, ideally, watch a complete lack of fireworks as January 19, 2038 passes us by.

hackaday.com/2025/07/22/the-ep…